Trusted AI: Bot Disclosure, Privacy & Best Practices

The use of AI and trust in AI go hand in hand. As financial institutions harness the power of conversational AI to transform self-service, it’s important to prioritize advanced security and privacy controls so that these deployments succeed.

A key part of the trust equation is transparency. How the interface is designed and what information is shared are critical to helping people engage with the technology, and the developing legislation around artificial intelligence (AI) can provide guardrails for that design.

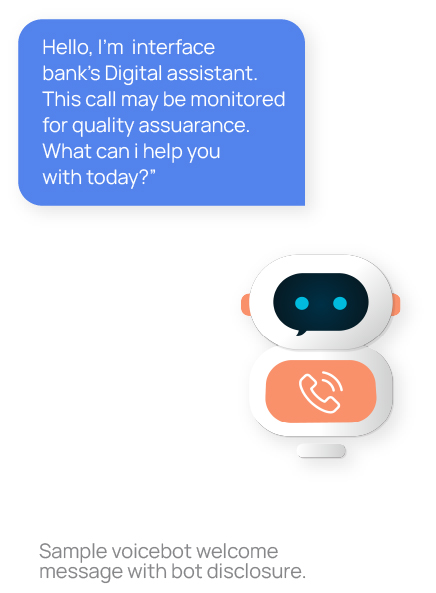

Voicebot and chatbot disclosure

In California, it is unlawful for a financial institution to use a bot to communicate or interact online with a person without disclosing that the communication is via a bot. To facilitate fair disclosure, there are a few best practices to keep in mind:

- Create a persona for your bot. Give it a name and an avatar, but avoid imagery that could mislead the person on the other side of the conversation into believing that they are interacting with a human.

- Set up your experiences so that the dialogue welcomes the user and declares that the communication is, in fact, with a bot: “Hi! I’m Simon, your virtual assistant!”

- Educate members on the bot experience (its purpose, the things it can help with, and how to access it) and share this information across all touchpoints where members or customers engage, including your website, social media, and branch locations.
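
As a minimal sketch of the second practice above, the opening turn can be built so that the disclosure is always part of it. The helper name `build_greeting`, the example capabilities, and the wording are illustrative assumptions, not part of any specific product API.

```python
def build_greeting(bot_name: str, capabilities: list[str]) -> str:
    """Return an opening message that always discloses the bot.

    Hypothetical helper for illustration only.
    """
    # The disclosure comes first so it can never be skipped.
    disclosure = f"Hi! I'm {bot_name}, your virtual assistant."
    if not capabilities:
        return disclosure
    # Optionally tell the user what the bot can help with.
    return f"{disclosure} I can help with {', '.join(capabilities)}."


if __name__ == "__main__":
    print(build_greeting("Simon", ["balance inquiries", "card controls"]))
```

Keeping the disclosure in one function means every channel that reuses the greeting (web chat, mobile, voice prompts) inherits the same wording.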

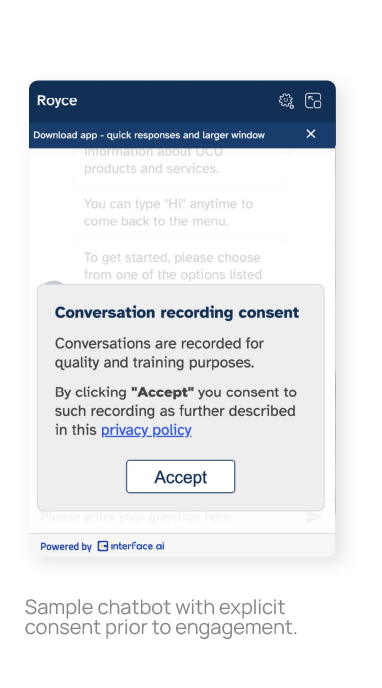

Privacy and consent capture with conversational AI

Several U.S. states have enacted data privacy laws to give consumers more choice over how companies collect and use their personal data. California led the charge with the California Consumer Privacy Act (CCPA) and the California Privacy Rights Act (CPRA).

A number of states now have comprehensive data privacy laws in place, including Texas, Virginia, Colorado, and New Jersey. Such laws grant rights to individuals pertaining to the collection, use, and disclosure of their personal data.

Not all legislation is the same, and different rules may apply depending on the location of your members or customers. Even so, there are a few general best practices that help you adhere to privacy regulations:

- Where voice assistance is provided, such as in a member service or customer contact center, include a declaration such as, “This call may be monitored for quality assurance.”

- Where chat conversations are recorded for quality and training purposes, provide an explicit acceptance flow for the user.

- Post a conspicuous link to your Privacy Policy on your digital channels and as part of any explicit permission flows.
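
The explicit acceptance flow for chat can be sketched as follows. This is a simplified illustration under stated assumptions: the `ChatSession` class, the "I agree" phrase, and the placeholder `PRIVACY_POLICY_URL` are all hypothetical, and what counts as valid consent depends on the laws that apply to you.

```python
from dataclasses import dataclass, field

# Placeholder URL (assumption); point this at your real Privacy Policy.
PRIVACY_POLICY_URL = "https://example.com/privacy"


@dataclass
class ChatSession:
    consented: bool = False
    transcript: list[str] = field(default_factory=list)

    def consent_prompt(self) -> str:
        # The prompt states the purpose and links the Privacy Policy.
        return (
            "This chat may be recorded for quality and training purposes. "
            f"See our Privacy Policy: {PRIVACY_POLICY_URL}. "
            "Reply 'I agree' to continue."
        )

    def handle_consent_reply(self, reply: str) -> bool:
        # Only an explicit affirmative counts; anything else means no consent.
        self.consented = reply.strip().lower() == "i agree"
        return self.consented

    def record(self, message: str) -> None:
        # Messages are stored only after explicit acceptance.
        if self.consented:
            self.transcript.append(message)
```

Whether the conversation continues unrecorded or ends when the user declines is a policy decision this sketch leaves open.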

Data Security

Conversational AI technology, like interface.ai, is powered by natural language processing (NLP) and machine learning, using vast amounts of data to learn from every interaction. interface.ai processes and stores conversational log data generated through the products only for the purpose of ensuring the delivery of the service. All interactions stored within the systems are anonymized: sensitive information shared with the assistant is masked and redacted, and personally identifiable information (PII) is removed before storage.
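
To illustrate the general idea of redacting sensitive values before a log line is stored, here is a minimal regex-based sketch. It is not interface.ai's actual pipeline; the patterns, the labels, and the `redact` function are assumptions chosen for illustration, and production systems typically need much broader coverage than three patterns.

```python
import re

# Illustrative patterns only (assumptions), applied in this order.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace each matched value with a bracketed label such as [SSN]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("My email is jane@example.com and my SSN is 123-45-6789"))
```

Running redaction before the write, rather than on stored logs afterwards, means the raw value is never persisted in the first place.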

Certifications

interface.ai performs a variety of audits and assessments to provide our customers and our team with independent, third-party assurance that we are adhering to our commitment to protect our systems and our customers’ data.

SOC 2 Type 2

An industry-recognized audit performed on an annual basis. The audit attests that we have appropriate controls that meet the AICPA Trust Services Criteria relevant to security, availability, and confidentiality.

CSA STAR Level 1

Certified under the Cloud Security Alliance’s STAR Level 1. As a Cloud Security Alliance STAR registrant, interface.ai makes its security practices available for immediate review, with no need to send us a survey.
